A regular filesystem is not intended to be mounted on more than one server at a time. Doing so can lead to serious inconsistencies that damage its logical structure. For instance, being unaware of each other’s activities, two servers may try to allocate the same block of storage to different files, each relying on the free-block information loaded into its own memory. Or one server may already have modified certain blocks while the others, unaware of this, keep using the outdated content. This problem can be addressed by using a clustered filesystem.

A clustered filesystem can be mounted on multiple servers at once, while being accessed by them on the block level and managed as a unified entity. It puts together the available storage capacity and shares it between the servers. At the same time, discrepancies are eliminated, since each server stays in sync with the actual filesystem state, as if all their applications were running on the same machine.

The clustered filesystem itself coordinates input/output operations and may lock them to avoid the so-called collisions.

There are several clustered file systems, including OCFS2.

Share a block storage on OCI

- create a block volume (for instance here volNicoTest)

- create or choose 3 VM instances that will share this block volume (here instance-nicotest2, instance-nicotest3, and instance-nicotest4, with Oracle Linux 8.6 as an OS)

- Attach this block volume to each instance:

- Use the OCI console to manually attach the volume to each instance using the option “read/write shareable”

- SSH into each instance and run the iSCSI attach commands provided by OCI. You can find these commands for each “volume – instance” attachment via the three-dot menu

- Your volNicoTest volume is now attached to every instance!

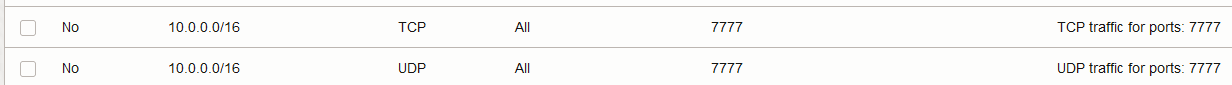

- Create 2 ingress rules in the subnet of your instances:

And on each instance authorize traffic on the 7777 port:

firewall-cmd --add-port=7777/tcp --add-port=7777/udp --permanent

firewall-cmd --reload

Now consider that you have the following instances with these private IPs:

- instance-nicotest2, 10.0.6.199

- instance-nicotest3, 10.0.6.239

- instance-nicotest4, 10.0.6.100

The following operations need to be executed on each instance:

firewall-cmd --add-port=7777/tcp --add-port=7777/udp --permanent

firewall-cmd --reload

dnf install ocfs2-tools

o2cb add-cluster ocfs2

# ocfs2 is the name you choose for your cluster; you can give it a different name

# this command causes the file /etc/ocfs2/cluster.conf to be created

/sbin/o2cb.init configure

Answer the /sbin/o2cb.init configure prompts as follows (the answers are the same on every instance):

[root@instance-nicotest2 ~]# /sbin/o2cb.init configure

Configuring the O2CB driver.

This will configure the on-boot properties of the O2CB driver.

The following questions will determine whether the driver is loaded on

boot. The current values will be shown in brackets ('[]'). Hitting

<ENTER> without typing an answer will keep that current value. Ctrl-C

will abort.

Load O2CB driver on boot (y/n) [n]: y

Cluster stack backing O2CB [o2cb]:

Cluster to start on boot (Enter "none" to clear) [ocfs2]: ocfs2

Specify heartbeat dead threshold (>=7) [31]:

Specify network idle timeout in ms (>=5000) [30000]:

Specify network keepalive delay in ms (>=1000) [2000]:

Specify network reconnect delay in ms (>=2000) [2000]:

Writing O2CB configuration: OK

checking debugfs...

Loading stack plugin "o2cb": OK

Loading filesystem "ocfs2_dlmfs": OK

Creating directory '/dlm': OK

Mounting ocfs2_dlmfs filesystem at /dlm: OK

Setting cluster stack "o2cb": OK

Registering O2CB cluster "ocfs2": OK

Setting O2CB cluster timeouts : OK

→ This generated the file /etc/sysconfig/o2cb

Still on each node, execute the following commands:

service o2cb start

service ocfs2 start

systemctl enable o2cb

systemctl enable ocfs2

sysctl -w kernel.panic=30

sysctl -w kernel.panic_on_oops=1

echo "kernel.panic=30" >> /etc/sysctl.d/99-yourcompanyname.conf

echo "kernel.panic_on_oops=1" >> /etc/sysctl.d/99-yourcompanyname.conf

o2cb register-cluster ocfs2

The following commands must be executed on only one instance, for example on instance-nicotest2:

o2cb add-node ocfs2 instance-nicotest2 --ip 10.0.6.199

o2cb add-node ocfs2 instance-nicotest3 --ip 10.0.6.239

o2cb add-node ocfs2 instance-nicotest4 --ip 10.0.6.100

These commands fill in the cluster configuration file /etc/ocfs2/cluster.conf:

[root@instance-nicotest2 ~]# cat /etc/ocfs2/cluster.conf

cluster:

heartbeat_mode = local

node_count = 3

name = ocfs2

node:

number = 0

cluster = ocfs2

ip_port = 7777

ip_address = 10.0.6.199

name = instance-nicotest2

node:

number = 1

cluster = ocfs2

ip_port = 7777

ip_address = 10.0.6.239

name = instance-nicotest3

node:

number = 2

cluster = ocfs2

ip_port = 7777

ip_address = 10.0.6.100

name = instance-nicotest4

Copy the content of /etc/ocfs2/cluster.conf from instance-nicotest2 to /etc/ocfs2/cluster.conf on instance-nicotest3 and instance-nicotest4.
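When copying the file by hand, keep in mind that the file is whitespace sensitive: stanza headers (`cluster:`, `node:`) start in the first column, parameter lines are normally indented with a tab, and a blank line separates stanzas. The listing above does not show that indentation. As a minimal sketch, assuming the three nodes and port 7777 used in this article, a hypothetical helper `render_cluster_conf` (not part of ocfs2-tools) could print an equivalent file so that you can compare it with what o2cb wrote:

```bash
# render_cluster_conf CLUSTER "NAME IP" ["NAME IP" ...]
# Hypothetical helper: prints an O2CB cluster.conf to stdout.
# Node numbers follow the order of the arguments, starting at 0.
render_cluster_conf() (
    cluster=$1
    shift
    port=7777   # assumption: the O2CB port used throughout this article
    number=0
    printf 'cluster:\n'
    printf '\theartbeat_mode = local\n'
    printf '\tnode_count = %d\n' "$#"
    printf '\tname = %s\n' "$cluster"
    for entry in "$@"; do
        name=${entry%% *}   # text before the first space
        ip=${entry##* }     # text after the last space
        printf '\nnode:\n'
        printf '\tnumber = %d\n' "$number"
        printf '\tcluster = %s\n' "$cluster"
        printf '\tip_port = %d\n' "$port"
        printf '\tip_address = %s\n' "$ip"
        printf '\tname = %s\n' "$name"
        number=$((number + 1))
    done
)
```

For example, `render_cluster_conf ocfs2 "instance-nicotest2 10.0.6.199" "instance-nicotest3 10.0.6.239" "instance-nicotest4 10.0.6.100" | diff - /etc/ocfs2/cluster.conf` would show any difference from the file on the current node. The function only prints; it does not write anything under /etc.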

And then restart o2cb and ocfs2 services on instance-nicotest3 and instance-nicotest4:

service o2cb restart

service ocfs2 reload

o2cb start-heartbeat ocfs2

Now we have to format our shared storage with ocfs2. We do this only once, on one of the instances, for example on instance-nicotest2:

mkfs.ocfs2 -L "my_ocfs2_vol" /dev/sdb -N 32

# This takes some time at "Formatting Journals:" while the disk is being formatted

# my_ocfs2_vol is just a label we chose

# /dev/sdb is your disk; if you created a partition on it, use that instead (for example /dev/sdb1). A partition is not mandatory, it depends on your needs

When you create the file system, it is important to consider the number of node slots you will need now and in the future. This number controls how many nodes can concurrently mount the volume. You can add node slots later, but doing so may hurt performance, because the new slots may be placed at the far edge of the disk platter.

-N 32 reserves 32 node slots, so up to 32 nodes can mount the volume at the same time.

[root@instance-nicotest2 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 46.6G 0 disk

├─sda1 8:1 0 100M 0 part /boot/efi

├─sda2 8:2 0 1G 0 part /boot

└─sda3 8:3 0 45.5G 0 part

├─ocivolume-root 252:0 0 35.5G 0 lvm /

└─ocivolume-oled 252:1 0 10G 0 lvm /var/oled

sdb 8:16 0 70G 0 disk

Now we identify the UUID of this disk with the blkid command:

[root@instance-nicotest2 ~]# blkid

/dev/sda1: SEC_TYPE="msdos" UUID="2314-8847" BLOCK_SIZE="512" TYPE="vfat" PARTLABEL="EFI System Partition" PARTUUID="ds454dsf456fd-b1a6-436a-87e0-ds454dsf456fd"

/dev/sda2: UUID="ds454dsf456fd-d067-48a1-84c0-ds454dsf456fd" BLOCK_SIZE="4096" TYPE="xfs" PARTUUID="f3ab92a7-63c7-479d-a813-0b2afcd9a7ff"

/dev/sda3: UUID="ds454dsf456fd-49vg-4Ces-d456-SDSQD-SDSQD-nLQwgd" TYPE="LVM2_member" PARTUUID="ds454dsf456fd-903e-4fdc-bf40-ds454dsf456fd"

/dev/mapper/ocivolume-root: UUID="b7dfdfdf-adfdf2-dfdfdf-a47e-df45dfs45" BLOCK_SIZE="4096" TYPE="xfs"

/dev/mapper/ocivolume-oled: UUID="df45dfs45-adfdf2-dfdfdf-a47e-df45dfs45" BLOCK_SIZE="4096" TYPE="xfs"

/dev/sdb: LABEL="my_ocfs2_vol" UUID="f985240d-f83c-493c-871e-d0ec89a6a529" BLOCK_SIZE="4096" TYPE="ocfs2"

Now on each node:

mkdir /sharedstorage

echo "UUID=f985240d-f83c-493c-871e-d0ec89a6a529 /sharedstorage ocfs2 _netdev,defaults 0 0" >> /etc/fstab

mount -a

Can’t mount? This node could not connect to nodes

dmesg -T

[Thu Oct 12 12:37:54 2023] o2net: Connection to node instance-nicotest2 (num 0) at 10.0.6.199:7777 shutdown, state 7

[Thu Oct 12 12:37:55 2023] o2cb: This node could not connect to nodes:

[Thu Oct 12 12:37:55 2023] 0

[Thu Oct 12 12:37:55 2023] .

[Thu Oct 12 12:37:55 2023] o2cb: Cluster check failed. Fix errors before retrying.

[Thu Oct 12 12:37:55 2023] (mount.ocfs2,59941,1):ocfs2_dlm_init:3355 ERROR: status = -107

[Thu Oct 12 12:37:55 2023] (mount.ocfs2,59941,0):ocfs2_mount_volume:1803 ERROR: status = -107

[Thu Oct 12 12:37:55 2023] (mount.ocfs2,59941,0):ocfs2_fill_super:1177 ERROR: status = -107

In this case you need to restart o2cb and ocfs2 services
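Status -107 in the log above corresponds to ENOTCONN (the connection to the other node is missing), so before or after restarting it can help to check that every node listed in /etc/ocfs2/cluster.conf accepts TCP connections on its O2CB port. The following is a minimal sketch, not an official tool: the function names are hypothetical, the parser assumes the stanza layout shown earlier, and the probe relies on bash's `/dev/tcp` feature and on `timeout` from coreutils.

```bash
# list_cluster_endpoints FILE
# Prints "name ip port" for each node: stanza of an O2CB cluster.conf.
list_cluster_endpoints() {
    awk '
        function emit() {
            if (in_node && name != "") print name, ip, port
            name = ip = port = ""
        }
        # A stanza header starts in the first column and ends with a colon.
        /^[a-z_]+:[ \t]*$/ { emit(); in_node = ($1 == "node:"); next }
        in_node && $2 == "=" {
            if ($1 == "name") name = $3
            else if ($1 == "ip_address") ip = $3
            else if ($1 == "ip_port") port = $3
        }
        END { emit() }
    ' "$1"
}

# check_node_port HOST PORT
# Succeeds if a TCP connection can be opened within 3 seconds.
check_node_port() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}

# report_unreachable_nodes FILE
# Prints one line per node that does not accept connections.
report_unreachable_nodes() {
    list_cluster_endpoints "$1" | while read -r name ip port; do
        check_node_port "$ip" "$port" || echo "cannot reach $name at $ip:$port"
    done
}
```

Running `report_unreachable_nodes /etc/ocfs2/cluster.conf` on the node that fails to mount prints nothing when all peers answer. A node that is reported usually points to a firewall rule, a missing ingress rule, or an o2cb service that is not online on that node.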

Add a node to your clustered filesystem

For instance, if you have a new instance-nicotest5 with private IP 10.0.6.200, SSH into this instance and run:

firewall-cmd --add-port=7777/tcp --add-port=7777/udp --permanent

firewall-cmd --reload

dnf install ocfs2-tools

o2cb add-cluster ocfs2

# Copy /etc/ocfs2/cluster.conf from another instance.

o2cb add-node ocfs2 instance-nicotest5 --ip 10.0.6.200

/sbin/o2cb.init configure

service o2cb start

service ocfs2 start

systemctl enable o2cb

systemctl enable ocfs2

echo "kernel.panic=30" >> /etc/sysctl.d/99-yourcompanyname.conf

echo "kernel.panic_on_oops=1" >> /etc/sysctl.d/99-yourcompanyname.conf

o2cb register-cluster ocfs2

mkdir /sharedstorage

echo "UUID=f985240d-f83c-493c-871e-d0ec89a6a529 /sharedstorage ocfs2 _netdev,defaults 0 0" >> /etc/fstab

mount -a

And on every other instance:

o2cb add-node ocfs2 instance-nicotest5 --ip 10.0.6.200

service o2cb restart

service ocfs2 restart

Increase your shared volume size

Go to the OCI console, list the block volumes, and modify the size of your volume.

OCI gives you rescan commands that you must execute on each instance:

sudo dd iflag=direct if=/dev/oracleoci/oraclevd<paste device suffix here> of=/dev/null count=1

echo "1" | sudo tee /sys/class/block/`readlink /dev/oracleoci/oraclevd<paste device suffix here> | cut -d'/' -f 2`/device/rescan

To replace <paste device suffix here>, find the suffix with:

[root@instance-nicotest2 ~]# ll /dev/oracleoci/oraclevd*

lrwxrwxrwx. 1 root root 6 Oct 13 12:30 /dev/oracleoci/oraclevda -> ../sdb

lrwxrwxrwx. 1 root root 7 Oct 6 13:22 /dev/oracleoci/oraclevda1 -> ../sda1

lrwxrwxrwx. 1 root root 7 Oct 6 13:22 /dev/oracleoci/oraclevda2 -> ../sda2

lrwxrwxrwx. 1 root root 7 Oct 6 13:22 /dev/oracleoci/oraclevda3 -> ../sda3

Grow your filesystem:

tunefs.ocfs2 -S /dev/sdb

# or tunefs.ocfs2 --volume-size /dev/sdb

Be careful: lsblk doesn’t show whether your filesystem has grown. You must use the df command:

[root@instance-nicotest2 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 4.7G 0 4.7G 0% /dev

tmpfs 4.8G 0 4.8G 0% /dev/shm

tmpfs 4.8G 97M 4.7G 2% /run

tmpfs 4.8G 0 4.8G 0% /sys/fs/cgroup

/dev/mapper/ocivolume-root 36G 9.3G 27G 27% /

/dev/sda2 1014M 314M 701M 31% /boot

/dev/sda1 100M 5.1M 95M 6% /boot/efi

/dev/mapper/ocivolume-oled 10G 122M 9.9G 2% /var/oled

tmpfs 967M 0 967M 0% /run/user/0

tmpfs 967M 0 967M 0% /run/user/987

tmpfs 967M 0 967M 0% /run/user/1000

/dev/sdb 100G 2.1G 98G 2% /sharedstorage
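The rescan commands provided by OCI can be wrapped in a small helper so that the device suffix only has to be typed once. This is a sketch under assumptions: the function names are hypothetical, `readlink -f` and `basename` replace the `readlink | cut` pipeline from the OCI snippet, and `/dev/oracleoci/oraclevdb` in the usage note is only an example path that you must replace with your own link.

```bash
# rescan_path_for LINK
# Resolves a /dev/oracleoci/oraclevd* symlink to its kernel device name
# and prints the matching sysfs rescan file.
rescan_path_for() (
    target=$(readlink -f -- "$1") || exit 1
    printf '/sys/class/block/%s/device/rescan\n' "$(basename -- "$target")"
)

# rescan_volume LINK
# Runs the two commands provided by the OCI console for that device.
rescan_volume() {
    path=$(rescan_path_for "$1") || return 1
    sudo dd iflag=direct if="$1" of=/dev/null count=1
    echo 1 | sudo tee "$path" > /dev/null
}
```

For example, `rescan_volume /dev/oracleoci/oraclevdb` would be run on every instance before growing the filesystem with tunefs.ocfs2 on one of them.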
